Building tools for the real world.

I enjoy engineering projects because they are useful, challenging, and my favorite approach to learning.

Some of these projects serve as demonstrations that my research translates to real world problems; others simply make life easier. I find it important to publicly release and document them — it’s a small way to contribute back to the free and open-source community.

Some of my favorite projects are highlighted below, and a few more are available on GitHub.

Go to: [Software, Hardware, Sports]

Software

learn2learn 2019 - Now

learn2learn is a meta-learning framework built on top of PyTorch.

It provides practitioners with high-level meta-learning implementations, and researchers with low-level utilities to develop new meta-learning algorithms for the supervised and reinforcement learning settings.

It is in active development, and was the winner of the PyTorch Summer Hackathon.

You can install it with pip install learn2learn.

[code, learn2learn.net, preprint, presentation]

Cherry 2018 - Now

Cherry is a reinforcement learning framework built on top of PyTorch.

What differentiates cherry from other RL frameworks is that it does not provide any algorithm implementation!

Instead, it provides utilities to make it easy for researchers to implement their own algorithms.

It has been used in many settings (optimization, meta-learning, variance reduction) and is under active development.

You can install it with pip install cherry-rl.

[code, cherry-rl.net]

![]()

Plotify 2017 - Now

A library that wraps Matplotlib to simplify plotting in Python.

It provides a more intuitive objecti-oriented interface with sane defaults and best practices to quickly make beautiful and legible plots.

Also under active development.

You can install it with pip install plotify.

[code, website]

Randopt 2016 - 2019

Randopt is a Python package for machine learning experiment management, hyper-parameter optimization, and results visualization.

It is in active development and I – as well as others – have been using it for every machine learning project since November 2016.

You can install it with pip install randopt.

[code, randopt.ml]

Hardware

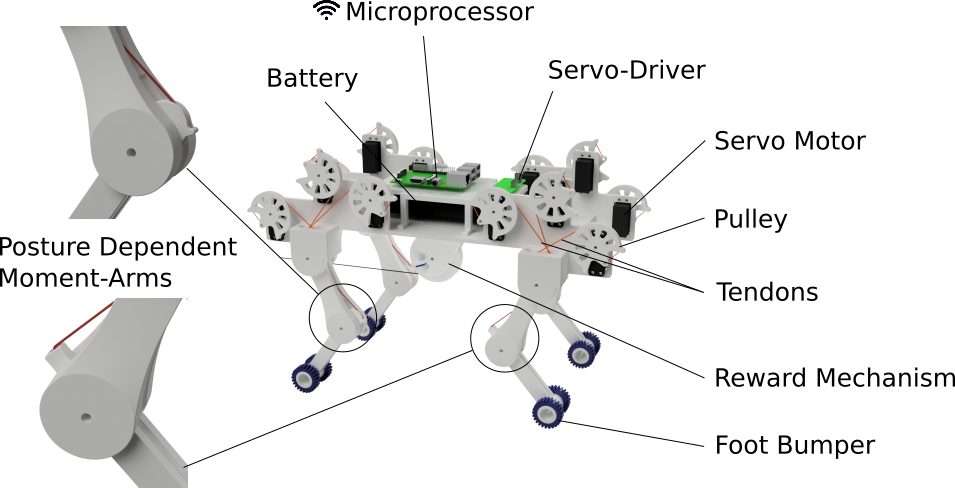

Kleo the Cat 2017 - 2018

In the Summer of 2017, Théo Denisart and I spent some time designing and programming a 3D-printed robotic cat for reinforcement learning, while at the ValeroLab.

What makes Kleo special is that its limbs are actuated via tendons – making it robust to failures but difficult to control.

Matt Simon brilliantly covered our work in his WIRED article.

[on WIRED, video, website]

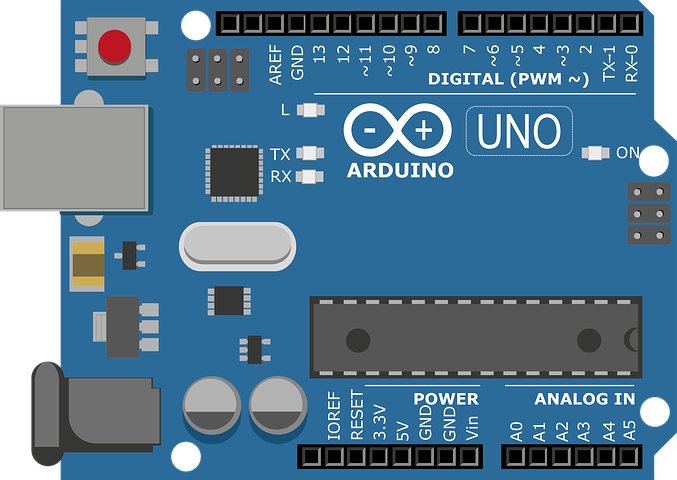

Sports Chronometer 2012 - 2013

Arduino-based wireless chronometer for racing sports (e.g., skiing, biking, etc).

Includes infrared signal modulation to avoid interferences with high altitude sun rays.

Lionel Rousseau took my code and designs, and deployed them on nice camera mounts and Arduino casings.

[code, video: early prototype, video: final result]

Sports

Tooski 2009 - Now

Tooski is the largest francophone website dedicated to the Swiss ski racing circuit.

On it, you’ll find news and blogs related to the Swiss Ski Team, the FIS World Cup circuit, as well as some younger skiers.

Tooski began as an adventure in merging two of my passions: computers and skiing.

[tooski.ch]